The report below was produced over the first half of 2022 for GRYFN, with me in charge of most of the undertaking. Over the course of writing it, I learned GIS concepts as well as new Unmanned Aerial System data processing platforms such as ESRI Drone2Map and OpenDroneMap. I also had to expand my knowledge of Pix4D and ArcGIS Pro. This involved researching many settings, workflows, and topics. On top of this, it took a large amount of trial and error throughout the project to learn how to generate a fair report. The report has been edited and redacted so as not to release metrics deemed important to GRYFN. This post is intended to showcase a large project I was tasked with during my time with GRYFN.

Introduction

This report discusses the findings of a multistage processing comparison test of several Unmanned Aerial System (UAS) data processing programs. Each program's orthomosaic outputs were analyzed both quantitatively and qualitatively. The comparison covered what data could be input into the software, what settings worked best for image reconstruction, the total time it took to produce results, and the quality of the final outputs. When working at scale with UAS operations, it is important to understand which programs operate best under which conditions and how much time can be saved by utilizing one over another.

UAVs, Sensors, and Equipment

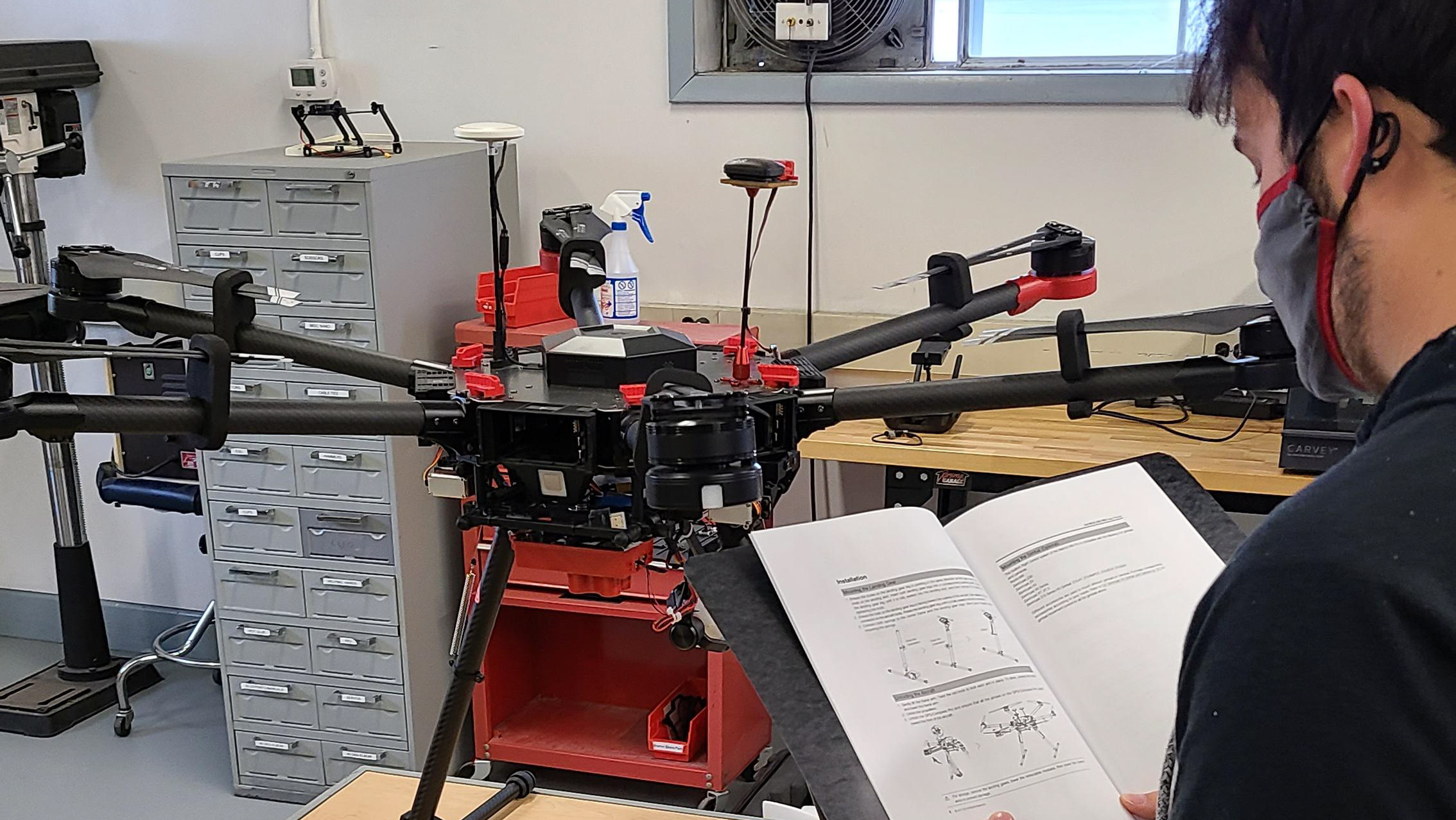

Data for this comparison was collected using three different UAVs: two DJI Matrice 600 Pros and a DJI Mavic Air 2. Both Matrice 600 Pros were equipped with a Sony A7R3 RGB camera with a 35mm lens, a Velodyne LiDAR sensor, an Applanix APX15 GNSS device, and a Headwall Nano VNIR sensor that was not used. The Mavic Air 2 utilized only its integrated RGB camera. In this report's processing comparison section, the evaluated outputs include notes about the UAV platform used to collect each dataset. GCPs were used in select datasets only; the targets were large black and white squares, and their actual positions were measured using a Trimble R10 GPS.

Datasets

This report looked at the orthomosaics generated from four different datasets. Dataset A was of a building and drainage basin at Purdue University’s Agronomy Center for Research and Education (ACRE) in West Lafayette, IN, captured on March 2nd, 2022, by a DJI Matrice 600. Dataset B was of ACRE in West Lafayette, IN, captured on March 2nd, 2022, by a DJI Mavic Air 2. Datasets A and B were the only ones to include GCPs. Dataset C was of a cornfield in Indiana, captured on June 3rd, 2021, by one of the DJI Matrice 600s. Dataset D was of a cornfield in Illinois, captured on August 4th, 2021, by one of the DJI Matrice 600s. Dataset D also combines three separate flights by the same UAV due to the larger area covered. All datasets were captured in the solar noon window to limit shadows in the collected images.

Software

Software Platforms

The following software programs were tested during the course of this evaluation: GRYFN Processing Tool, Pix4D Mapper, ESRI Drone2Map, and OpenDroneMap. The GRYFN Processing Tool is produced by GRYFN. It supports several data types, including RGB, VNIR, LiDAR, GNSS, and SWIR. It uses a two-step process. The first step is to “bundle” all relevant collected data together with a system calibration file unique to each Unmanned Aerial Vehicle (UAV) and an optional processing extent file in JSON or GeoJSON format. Once all data is bundled into a GRAW folder, the second step is to run that data through a processing pipeline to generate outputs. The software comes with some pipeline templates, but you can create your own so long as you understand your UAV's specifications and the metadata of the flight conducted. For this testing, RGB and LiDAR pipelines were utilized.

Pix4D Mapper is the general-use UAS data processing program from the company Pix4D. While Pix4D offers several products, Mapper is the most general of the options and was determined to be best for this testing. Mapper begins by collecting all images from a flight, in JPEG and/or TIFF formats. It then allows you to specify the image coordinate system, supply a geolocation file for the images, and import a custom camera model. From there, you choose a processing template for the products you’d like to generate; the options include Standard 3D Maps, Standard 3D Models, and Ag Multispectral. For this testing, the Standard 3D Maps processing template was utilized. Mapper then opens into the processing portion of the program, where the ability to add data such as Ground Control Points (GCPs) sits behind several sub-menus.

Drone2Map is a UAS data processing program from ESRI. The main selling point of this software is its direct compatibility with ESRI ArcGIS products. The software starts by having you select a project template; for this testing we used 2D Full. It then asks you to import images and a GPS file, and to set the GPS coordinate system. The GPS file was prepared using the format image name, X/Easting, Y/Northing, Z, omega, phi, kappa. From there it loads into the processing portion of the software and provides a variety of options, including importing custom camera models, JSON or GeoJSON files for clipping the processing area, and GCPs.
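As a rough illustration of the GPS file layout described above, the sketch below writes one row per image in the order image name, X/Easting, Y/Northing, Z, omega, phi, kappa. The comma delimiter, the lack of a header row, the number of decimal places, and the sample values are all assumptions made for the example, not details taken from the report.

```python
import csv
import io

# Column order from the text: image name, X/Easting, Y/Northing, Z, omega, phi, kappa.
COLUMNS = ["image", "easting", "northing", "z", "omega", "phi", "kappa"]


def write_geolocation_rows(records, stream):
    """Write one comma-separated row per image, in the column order above."""
    writer = csv.writer(stream, lineterminator="\n")
    for rec in records:
        writer.writerow([
            rec["image"],
            f"{rec['easting']:.3f}",   # position values: 3 decimals (assumed)
            f"{rec['northing']:.3f}",
            f"{rec['z']:.3f}",
            f"{rec['omega']:.5f}",     # orientation angles: 5 decimals (assumed)
            f"{rec['phi']:.5f}",
            f"{rec['kappa']:.5f}",
        ])


# Hypothetical record; real values would come from the GNSS/INS trajectory.
sample = [{
    "image": "IMG_0001.JPG",
    "easting": 500000.0, "northing": 4480000.0, "z": 250.0,
    "omega": 0.1, "phi": -0.2, "kappa": 90.0,
}]

buffer = io.StringIO()
write_geolocation_rows(sample, buffer)
print(buffer.getvalue(), end="")
```

Writing to a stream rather than a fixed path keeps the helper easy to check; in practice the stream would be a file opened in the project folder.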

OpenDroneMap (ODM) is an open-source program for UAS data processing. Of the few varieties of the application available, ODM console is the original community product and was selected for this testing. ODM console is controlled entirely through a command prompt-style terminal. A project folder containing an images folder is the minimum setup required. In the project folder, you can also include an image geolocation file and a JSON or GeoJSON file to be used as a processing extent. Upon startup, you are given a lengthy list of all the options that can be added to the command you run, with a short explanation of what each option does.
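A processing extent file of the kind mentioned above can be sketched as a single GeoJSON polygon. The example below is a minimal sketch: the function name is hypothetical, the corner coordinates are placeholders rather than the real survey boundary, and whether a given program expects a FeatureCollection or a bare geometry (and in which coordinate system) is an assumption to verify against that program's documentation.

```python
import json


def extent_feature_collection(corners):
    """Build a GeoJSON FeatureCollection holding one Polygon from (x, y) corners."""
    ring = [list(pt) for pt in corners]
    if ring[0] != ring[-1]:
        ring.append(list(ring[0]))  # GeoJSON linear rings must be closed
    return {
        "type": "FeatureCollection",
        "features": [{
            "type": "Feature",
            "properties": {},
            "geometry": {"type": "Polygon", "coordinates": [ring]},
        }],
    }


# Placeholder longitude/latitude corners, not the actual field boundary.
corners = [(-87.000, 40.470), (-86.990, 40.470), (-86.990, 40.480), (-87.000, 40.480)]
extent = extent_feature_collection(corners)
print(json.dumps(extent, indent=2))
```

The printed text can be saved with a `.geojson` or `.json` extension and supplied wherever a program accepts a processing boundary.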

Software Settings

After a round of initial testing to determine what would lead to the best possible orthomosaic output from each software, we established the settings that would be used for each. The GRYFN Processing Tool has a notable requirement for a handmade camera model (the system calibration file), while all other programs auto-detect a camera model. While the camera model created for the GRYFN Processing Tool could be amended and imported into the other programs, each program rated its own auto-detected camera model as more accurate. This is not to say that the auto-detected models were better in all cases, but that each auto-generated model was designed for its own software, similar to how the handmade models were optimized for GRYFN’s needs. The GRYFN Processing Tool was also the only program that could use LiDAR data to create the point cloud that serves as the basis for reconstructing an orthomosaic. Because of this, we opted to test GRYFN’s RGB processing both with and without LiDAR.

Pix4D Mapper was tested using the Standard 3D Maps processing template. It was provided a geolocation file for the images used, GCPs were added when applicable, and all three steps of processing were enabled (initial processing; point cloud and mesh; DSM and orthomosaic). While a shapefile can be imported for other 3D projects in Mapper to create a boundary for processing, orthomosaic generation does not offer this ability, which is notable because all other programs were able to import a file to set a boundary for orthomosaic generation.

Drone2Map was tested using the 2D Full processing template. All steps of processing enabled by default remained enabled during testing. Drone2Map required a geolocation file to process the images. The program was always given a file to enable the “crop” feature, which sets a boundary for orthomosaic generation. GCP data was provided when applicable; however, the program would at times reject the provided file because it deemed there to be no images within the boundary.

ODM was given an image geolocation file and a GeoJSON file for its boundary command. Other commands consistently used were time, dsm resolution, orthomosaic resolution, and use hybrid bundle adjustment. ODM also has a command called fast orthophoto, which skips point cloud densification to try to speed up the process; this was used in several cases to determine how much quality was lost for the time savings offered. For datasets where the program had difficulty matching photos, the command feature quality lowest was used to try to force the software to match images that have fewer overall matching features for reconstruction.
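The option set described above can be sketched as a small helper that assembles the arguments for one ODM run. The flag spellings below are my reading of the ODM command-line options, not something taken from the original report, and should be checked against the help output of the installed version. In particular, I am assuming that "dsm resolution" corresponds to `--dem-resolution` and that resolutions are given in cm per pixel. The function name, file name, and resolution values are hypothetical, and the part of the command that launches ODM itself is left out because it depends on how ODM is installed.

```python
def build_odm_options(boundary_path, dem_resolution_cm, ortho_resolution_cm,
                      fast_orthophoto=False, lowest_feature_quality=False):
    """Return the list of ODM options used for one test run (flag names assumed)."""
    options = [
        "--boundary", boundary_path,                       # GeoJSON processing extent
        "--time",                                          # report processing time
        "--dem-resolution", str(dem_resolution_cm),        # assumed cm/pixel
        "--orthophoto-resolution", str(ortho_resolution_cm),
        "--use-hybrid-bundle-adjustment",
    ]
    if fast_orthophoto:
        # Skips point cloud densification to trade quality for speed.
        options.append("--fast-orthophoto")
    if lowest_feature_quality:
        # Fallback for datasets where image matching struggled.
        options += ["--feature-quality", "lowest"]
    return options


# Placeholder values; the settings used in the report are not published here.
standard_run = build_odm_options("extent.geojson", 5, 5)
fast_run = build_odm_options("extent.geojson", 5, 5, fast_orthophoto=True)
print(" ".join(standard_run))
print(" ".join(fast_run))
```

Keeping the options in one function makes it easier to run the same dataset with and without fast orthophoto and compare the timing that the time option reports.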

Questions Answered

Here we will discuss some of the questions I was asked to answer through this project. The purpose of this report was to compare the processing times and orthomosaic output quality of several UAS processing programs. For GRYFN, I provided screenshots of each orthomosaic with my qualitative analysis, along with tables and charts showing processing times and, where applicable, the level of geospatial accuracy. The analysis and screenshots helped me and others assess the visibility of seam lines, the presence of artifacts, and the accuracy of reconstruction. Alongside these direct questions, I was able to present answers to other relevant questions.

These other questions included how long it took each software to import photos and how much time it took to set up processing. Another quantitative metric analyzed in this project was the output file size of each processing program. This matters to a business that conducts hundreds of UAS operations in a year: the smaller the outputs, the less money needs to be spent on storage space. Following all of this is the ease-of-use factor for each program. Often, companies have staff other than the UAS operators process the data. How difficult it is to teach someone one of these programs, or how easy it is to create a consistent workflow, can influence a company's choice of program.

Conclusion

This project and its finalized report offered GRYFN evidence of how their processing program compared to several competitors, identifying both where GRYFN was better than competitors and where there was room for improvement. Most importantly, identifying where improvements could be made allows the company to refocus certain efforts and work more efficiently to develop a better product. The report also identified certain flaws and oddities in the other programs that differentiated the GRYFN solution. Of course, here in 2023 some of this information is outdated, so a new comparison will eventually be needed. However, with this foundational report done, the next one can be done faster and better, and I’m happy to have had the opportunity to take on this project and write the foundational report for GRYFN.